In the rapidly evolving landscape of software development and deployment, two major technologies have emerged as game-changers: Kubernetes and Artificial Intelligence (AI). Kubernetes, the open-source container orchestration platform, has become the de facto standard for managing containerized applications at scale. On the other hand, AI, with its transformative potential across industries, is increasingly becoming a core component of modern applications. The convergence of Kubernetes and AI is not just a technological trend but a significant shift towards more scalable, efficient, and intelligent software systems.

The Role of Kubernetes in Modern Software Development

Kubernetes has revolutionized the way we deploy and manage applications. It provides a robust platform for automating the deployment, scaling, and operation of application containers across clusters of hosts. With features like self-healing, load balancing, and automated rollouts and rollbacks, Kubernetes ensures that applications run smoothly and efficiently, even in complex and large-scale environments.
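As a rough illustration of the features mentioned above, the sketch below builds a Deployment manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name `build_deployment`, the image `example.com/web:1.0`, port 8080, and the `/healthz` path are assumptions made for this example, not details from any particular application.

```python
import json


def build_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Return a Deployment manifest as a dict (hypothetical helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Kubernetes keeps this many Pods running and replaces failed ones.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            # Automated rollouts: replace Pods gradually instead of all at once.
            "strategy": {
                "type": "RollingUpdate",
                "rollingUpdate": {"maxUnavailable": 1, "maxSurge": 1},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            # Self-healing: the container is restarted when
                            # this probe fails (path and port are assumed).
                            "livenessProbe": {
                                "httpGet": {"path": "/healthz", "port": 8080},
                                "initialDelaySeconds": 10,
                                "periodSeconds": 5,
                            },
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_deployment("web", "example.com/web:1.0"), indent=2))
```

If a rollout of such a Deployment goes wrong, `kubectl rollout undo deployment/web` returns it to the previous revision.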

One of the key reasons Kubernetes has gained such widespread adoption is its ability to abstract the underlying infrastructure, allowing developers to focus on application logic rather than the complexities of the environment. This level of abstraction is crucial in today’s multi-cloud and hybrid environments, where applications need to run consistently across different infrastructures.

The Rise of AI in Software Systems

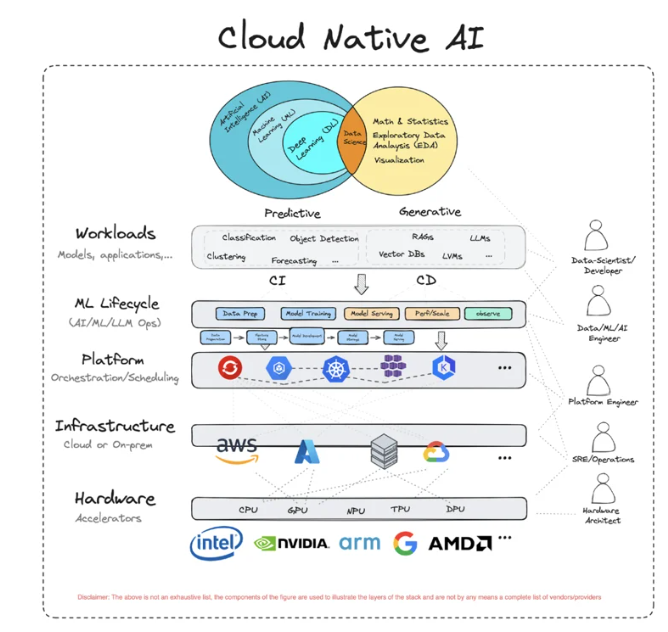

Artificial Intelligence has transitioned from a buzzword to a critical component of modern software systems. AI-powered applications, from recommendation engines to predictive analytics and autonomous systems, are becoming increasingly prevalent across industries. The ability to process vast amounts of data, learn from it, and make intelligent decisions is what makes AI so powerful.

However, deploying AI models at scale presents its own set of challenges. These include managing the lifecycle of AI models, scaling machine learning workloads, ensuring reproducibility, and integrating AI models with existing applications. This is where the synergy between Kubernetes and AI comes into play.

How Kubernetes Empowers AI Workloads

Kubernetes provides an ideal platform for running AI workloads due to its scalability, flexibility, and support for distributed computing. Here’s how Kubernetes empowers AI:

- Scalability and Resource Management: AI workloads, particularly deep learning models, require significant computational resources. Kubernetes allows you to scale these workloads across multiple nodes, ensuring efficient use of resources. Through per-container resource requests and limits, you can allocate CPU and memory as needed, and GPUs can be requested in the same way once the vendor’s device plugin is installed on the cluster.

- Automated Deployment and CI/CD: Kubernetes is a natural deployment target for continuous integration and continuous deployment (CI/CD) pipelines for AI models. Because the desired state is declared through its API, a pipeline can deploy, update, and scale models without manual intervention. Combined with CI/CD tools, this keeps deployed models in step with the latest approved version and lets them be rolled back quickly in case of issues.

- Hybrid and Multi-Cloud Deployments: AI workloads often need to be deployed across different cloud environments or on-premises data centers. Kubernetes provides a consistent platform that abstracts away the underlying infrastructure, making it easier to deploy AI models in hybrid or multi-cloud environments. This flexibility is crucial for organizations that need to leverage different types of infrastructure for their AI workloads.

- Support for Distributed Training: Training AI models, especially deep learning models, often requires distributed computing across multiple machines. Kubernetes can run distributed training with frameworks like TensorFlow and PyTorch, as well as data processing engines like Apache Spark, enabling large-scale training jobs to run efficiently across clusters.

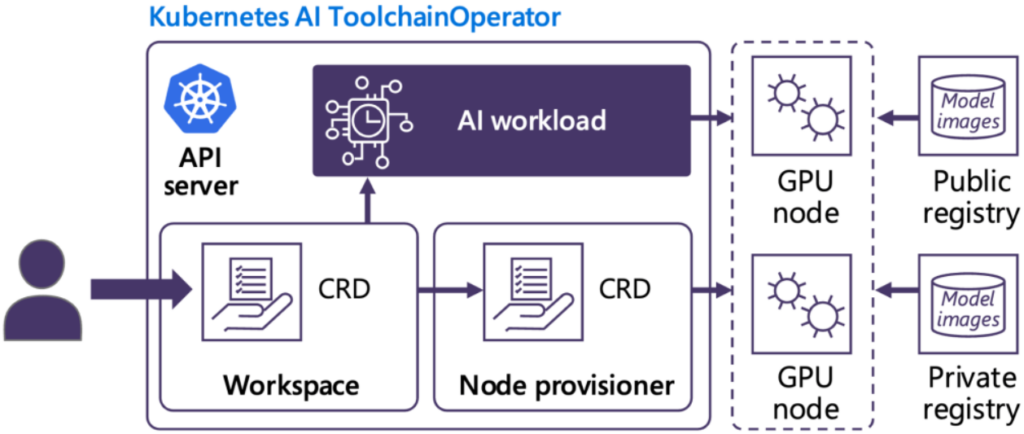

- Model Serving and Inference: Once AI models are trained, they need to be deployed for inference. Kubernetes, with its support for microservices architecture, allows AI models to be deployed as scalable services. Tools like Kubeflow, a machine learning toolkit for Kubernetes, further streamline the process of deploying, managing, and scaling AI models in production.
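The resource management point in the list above can be sketched as a training Job that asks for CPU, memory, and a GPU. This is a minimal example under stated assumptions: the helper `build_training_job`, the image name, and the CPU and memory sizes are hypothetical, and the `nvidia.com/gpu` resource is only schedulable on clusters where the NVIDIA device plugin is installed.

```python
import json


def build_training_job(name: str, image: str, gpus: int = 1) -> dict:
    """Return a batch/v1 Job manifest for a training run (hypothetical helper)."""
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Retry a failed training Pod at most twice.
            "backoffLimit": 2,
            "template": {
                "spec": {
                    # Jobs require restartPolicy Never or OnFailure.
                    "restartPolicy": "Never",
                    "containers": [
                        {
                            "name": "trainer",
                            "image": image,
                            "resources": {
                                # Sizes below are illustrative assumptions.
                                "requests": {"cpu": "4", "memory": "16Gi"},
                                "limits": {
                                    "cpu": "4",
                                    "memory": "16Gi",
                                    # Extended resource exposed by the NVIDIA
                                    # device plugin; GPUs go under limits.
                                    "nvidia.com/gpu": str(gpus),
                                },
                            },
                        }
                    ],
                }
            },
        },
    }


if __name__ == "__main__":
    job = build_training_job("train-model", "example.com/trainer:1.0", gpus=2)
    print(json.dumps(job, indent=2))
```

The scheduler places the Pod only on a node that can satisfy all of these requests, which is how Kubernetes keeps GPU nodes for the workloads that need them.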

Kubeflow: Bridging Kubernetes and AI

Kubeflow has emerged as a critical tool in the convergence of Kubernetes and AI. It is an open-source platform that runs on Kubernetes and provides a set of tools for developing, orchestrating, deploying, and running scalable and portable machine learning workloads. Kubeflow allows data scientists and developers to create end-to-end machine learning pipelines that are portable and can be deployed across different environments.

With Kubeflow, organizations can:

- Standardize Machine Learning Workflows: Kubeflow provides a consistent platform for creating, deploying, and managing machine learning workflows. This standardization reduces the complexity and variability that often come with deploying AI models.

- Leverage Kubernetes’ Scalability: By running on Kubernetes, Kubeflow inherits all the scalability and resource management benefits of the underlying platform. This makes it easier to scale AI workloads as needed.

- Integrate with Existing Kubernetes Ecosystem: Kubeflow integrates seamlessly with the broader Kubernetes ecosystem, including monitoring, logging, and security tools. This integration ensures that AI workloads are not only scalable but also secure and observable.
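To make the scalability point more concrete, here is a simplified sketch of the replica calculation that the Kubernetes Horizontal Pod Autoscaler documents: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The function name, the parameter names, and the replica bounds are assumptions for illustration. The real controller also handles missing metrics, Pod readiness, and stabilization windows, none of which are modeled here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 100,
    tolerance: float = 0.1,
) -> int:
    """Simplified Horizontal Pod Autoscaler calculation (illustrative only)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Four inference replicas at 90% average CPU with a 60% target.
    print(desired_replicas(4, 90, 60))
    # Ten replicas at 30% average CPU with a 60% target.
    print(desired_replicas(10, 30, 60))
```

In this sketch, a model-serving Deployment under heavier load than its target grows, and one under lighter load shrinks, without anyone editing the replica count by hand.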

Real-World Applications and Benefits

The convergence of Kubernetes and AI is already driving innovation across various industries. For instance, in the healthcare sector, AI models for diagnostics and patient care can be deployed at scale, supporting faster and more accurate medical decisions. In finance, AI-driven fraud detection systems can be scaled to handle vast amounts of transaction data in real time. In retail, personalized recommendation engines powered by AI can be deployed across global e-commerce platforms, providing a tailored shopping experience for millions of users.

The benefits of this convergence are manifold:

- Increased Efficiency: Automated deployment and scaling of AI models lead to more efficient use of resources, reducing operational costs and improving performance.

- Faster Time to Market: With Kubernetes, organizations can deploy AI models faster, allowing them to quickly adapt to market changes and customer needs.

- Enhanced Collaboration: The standardization of AI workflows on Kubernetes enables better collaboration between data scientists, developers, and operations teams.

- Future-Proofing AI Investments: By leveraging Kubernetes, organizations can ensure that their AI investments are scalable, portable, and adaptable to future technological advancements.

Conclusion

The convergence of Kubernetes and AI marks a new era in software development and deployment. Kubernetes provides the scalability, flexibility, and automation needed to manage AI workloads effectively, while AI brings intelligence and predictive capabilities to modern applications. Together, they are enabling organizations to build more robust, scalable, and intelligent systems that can meet the demands of the future.

As these technologies continue to evolve, we can expect to see even more powerful integrations, driving further innovation and transforming industries across the board. Whether you’re a developer, data scientist, or IT operations professional, understanding the synergy between Kubernetes and AI is crucial for staying ahead in the rapidly changing tech landscape.